The Most Important Technological Advancements in History

Technology is the key to a better way of life. Our ancient ancestors knew that, so they shaped stone tools to craft, cut, and harvest. They observed the destructive power of nature and learned to cook with fire. Then, we moved on wheels until we flew with wings. We channeled electricity into glass bulbs and shone light into the darkness. Our greatest scientists learned to divide atoms, but they are still trying to fuse them. Each of these advancements has made humanity more remarkable over time, and future concepts and prototypes will be invaluable in improving the lives of the next generation. These are the most significant creations that inventors and geniuses have left to the world.

| Technology | Year Invented | Impact On History |
|---|---|---|
| Primitive Advancements | 3.3 mya - 5200 BC | Mastery of stone tools, fire, and wheels. |
| Early Writing Systems | 6600 BC - 3200 BC | Development of the first forms of writing. |
| Metallurgy Beginnings | 5500 BC | Introduction of metal smelting and alloy formation. |
| Steam Engines | 1712 AD | Engine that sparked the Industrial Revolution. |
| Discovery of Electricity | 1752 AD | Initial study and harnessing of electricity. |
| Automobiles | 1886 AD | Development of the modern car and internal combustion engine. |
| Airplanes | 1903 AD | Wright brothers' creation of the first airplane. |
| Particle Accelerators | 1929 AD | Advancements in high-speed particle collisions. |
| Nuclear Fission | 1938 AD | Discovery of splitting atomic nuclei for energy. |
| Computer Revolution | 1945 AD | Emergence of digital, programmable computing. |
| Artificial Intelligence | 1956 AD | Onset of machines mimicking human cognitive functions. |
| Space Exploration | 1957 AD | Key events in human space travel and satellite deployment. |
| Modern Medicine | Current | Key medical breakthroughs like MRI and CRISPR. |
| Future Technologies | Ongoing | Emerging fields like quantum computing and nuclear fusion. |

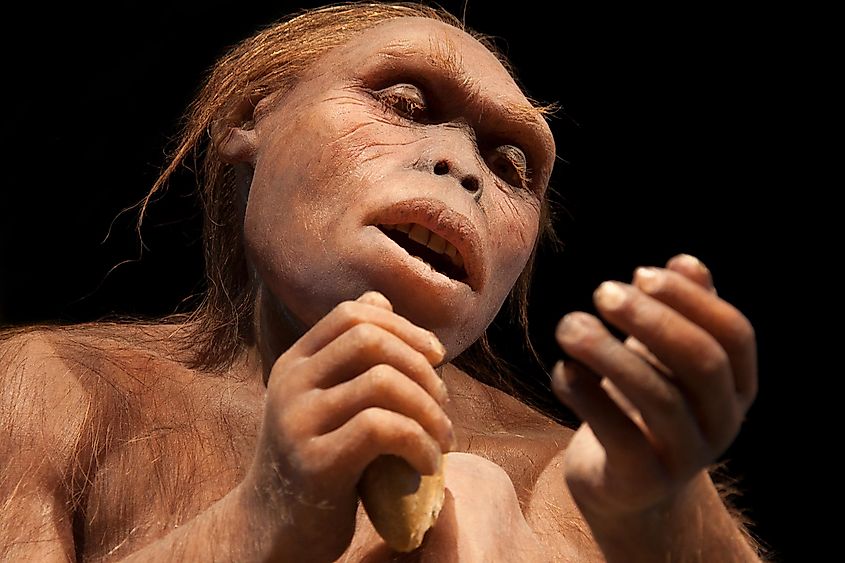

Primitive Advancements (3,300,000 BC - 5200 BC)

Around 3.3 million years ago, Australopithecus afarensis, an ancestor of humans known as "Lucy's species," began using stone tools and consuming meat, and evidence of deliberate toolmaking dates back at least 2.6 million years. Early humans developed a method to form blades from stone flakes and attached these blades to wood or bone with plant fibers. Then, 1.7 to 2.0 million years ago, after observing naturally occurring wildfires, our ancestors added fire to the tools they already relied on for hunting and building. Mastering cooking and smoking was necessary for the safe consumption and preservation of meat and for surviving harsh winters. Finally, our ancestors developed the wheel around 5200 BC (over seven thousand years ago) and used it primarily for pottery. It became essential for transport by 3300 BC and commonplace with the advent of the chariot around 2200 BC. Today, humans still use all of these technologies.

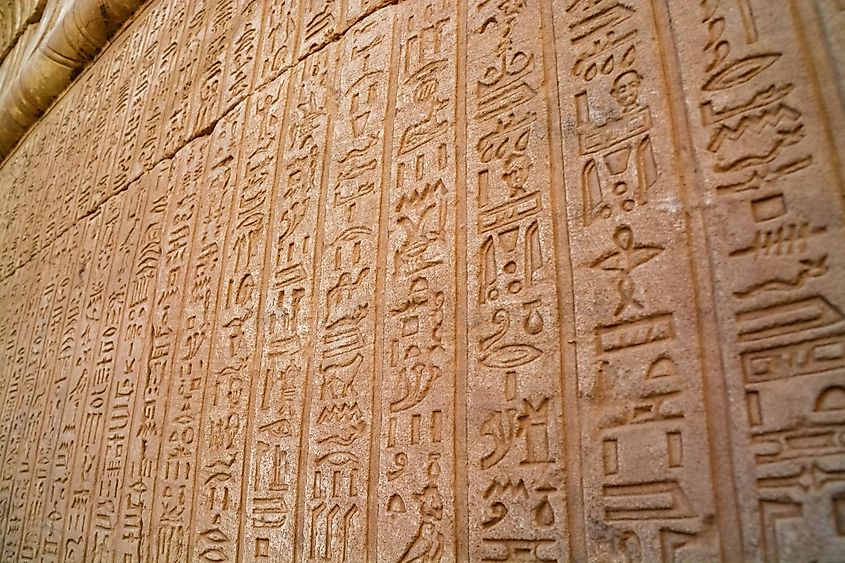

Early Writing Systems (6600 BC - 3200 BC)

The first known examples of abstract shapes traceable to an ancient writing system are the Jiahu symbols, carved on tortoise shells in Jiahu about 6600 BC; the Vinča symbols (carved into the Tărtăria tablets), dating back to 5300 BC; and the Indus script, going back to 3300 BC. Thanks to these examples, we can safely say that the first writing systems of the Early Bronze Age were not a sudden invention. Around 3200 BC, the Sumerians of Mesopotamia were likely the first to develop a complete writing system, which they inscribed on clay tablets. Experts once assumed Egyptian hieroglyphs stemmed from Sumerian writing, but they now believe the system developed independently.

If we turn our attention to more recent times, it is clear that written language has evolved considerably throughout the world. Around 1440, in Germany, the talented goldsmith Johannes Gutenberg introduced the printing press and started the printing revolution. This innovation allowed publishers to leave laborious hand-copied manuscripts behind, as books and papers could now be copied en masse, allowing for the widespread dissemination of information amongst the public.

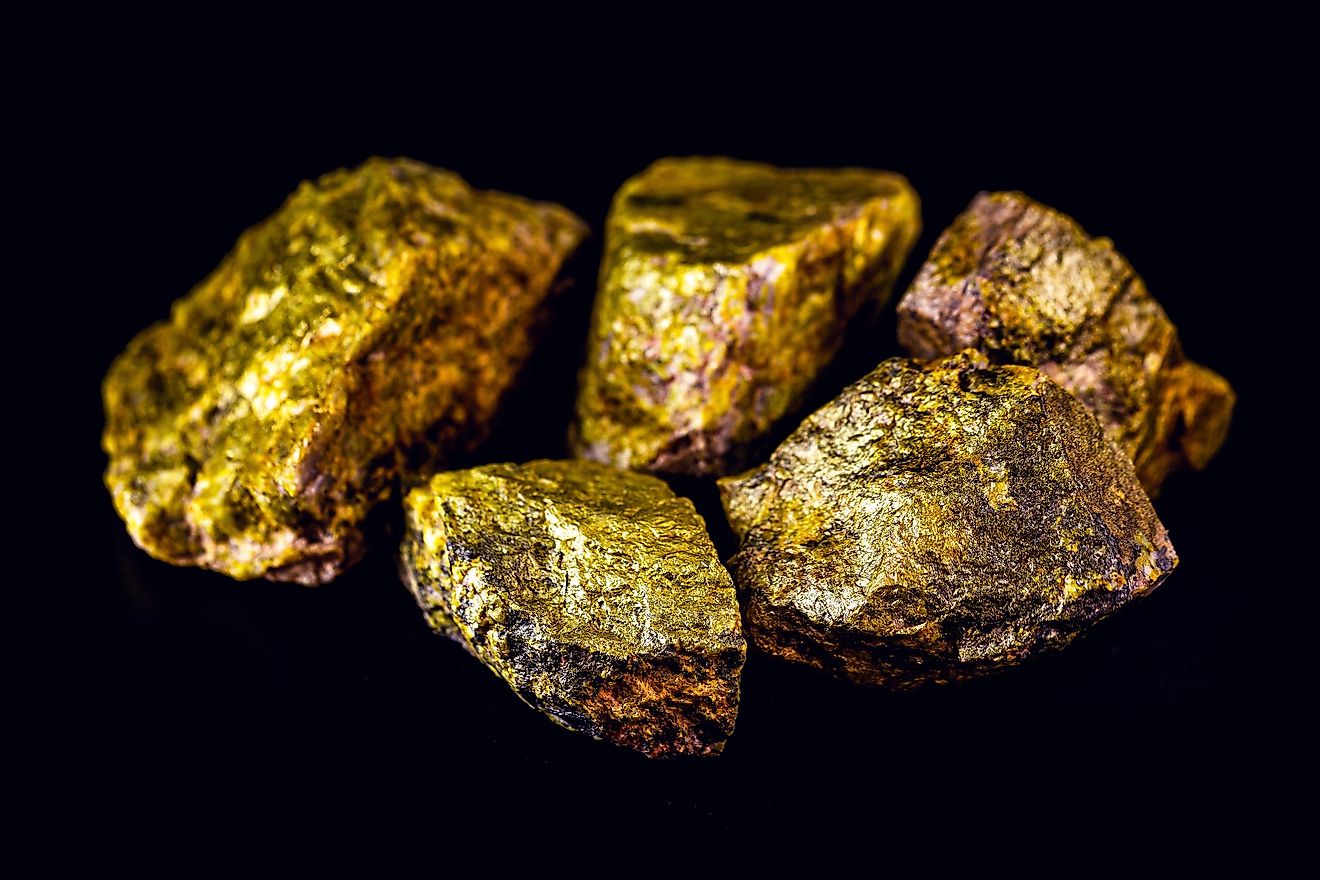

Metallurgy (5500 BC)

The ability to control fire and melt metallic ores was an astounding advancement in human history. This skill enabled the extraction of metals from their ores, marking the transition into the Bronze and Iron Ages. Archeologists and geologists found a copper axe, the earliest evidence of copper smelting, at the Belovode site near Pločnik, belonging to the Vinča culture and dating to 5500 BC. Alloys, which are made by mixing metals during the metalworking process, combined copper with other metals such as tin, silver, and iron. The first alloy age was therefore the Bronze Age, which began about 3300 BC. Extracting iron as a workable metal was a lot harder, and the Iron Age only began in approximately 1200 BC. By the much more challenging step of adding carbon to iron, metalworkers achieved one of the most essential alloys as early as 1800 BC: steel. All steels contain carbon, between 0.002% and 2.14%, and become stainless when chromium is mixed in and its content exceeds 11%. The advantage of steel over plain iron is its greater strength and hardness. Finally, steel came into more regular use in the era of the Roman Empire, which had the infrastructure needed to produce it.

Steam Engines (1712)

The steam engine is an external combustion engine that burns a fuel such as coal and uses steam as its working fluid. The pressure of the steam is converted into mechanical work, either by pushing pistons connected to rods or by spinning turbines, which can, for example, drive the wheels of a train. There is a record of a rudimentary steam device, dubbed the 'aeolipile,' described by the Greek mathematician Hero of Alexandria in the 1st century AD. Further rudimentary steam turbine devices were described by polymath Taki al-Din in 1551 and Italian engineer and architect Giovanni Branca in 1629. Then, in 1712, English inventor Thomas Newcomen built the first practical steam engine, which pumped water out of mines, and successors began improving the design by 1764, eventually producing engines that could power iconic 19th-century trains. This substantial technological advancement brought semi-automated factories to life, as well as fast, long-range delivery of goods, and sparked the start of the Industrial Revolution.

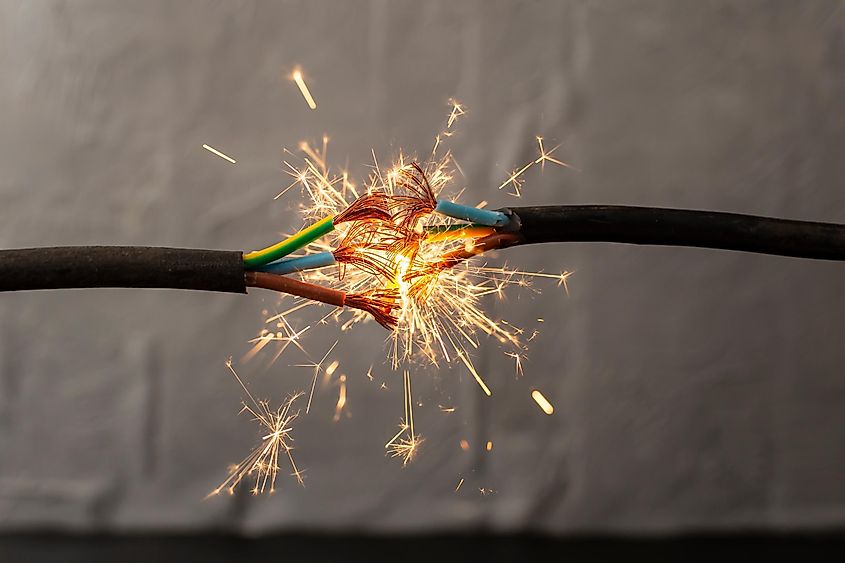

Discovery of Electricity (1752)

Like fire, electricity is a natural phenomenon that did not need inventing. American polymath Benjamin Franklin is the scientist most often credited with investigating it, having attached a wire to a kite during a thunderstorm in 1752. However, earlier scientists such as Thales of Miletus in the 6th century BC and William Gilbert in the 1600s had already studied it. Regarding importance, it is arguable that the study, and ultimately the mastery, of electricity is foundational to all successive technological advances.

Thanks to electricity, 1837 saw the first electrical telegraph, invented by English inventor William Fothergill Cooke and English scientist Charles Wheatstone. Morse code, invented by Samuel Morse in 1838, was adopted shortly afterward and left the first needle-based telegraphs behind. The first Morse message, sent in 1844 from Washington to Alfred Vail in Baltimore, quoted the Bible: "What hath God wrought." This revolutionary form of communication later went wireless thanks to the radio wave transmission that Guglielmo Marconi began engineering in 1894.

Like the discovery of electricity, the invention of the lightbulb was a process that took nearly a century, and Thomas Edison was only one part of it. He acquired some of his predecessors' patents, learned from their mistakes, and invented an imperfect lightbulb in 1879 that lasted only 40 hours. Two years later, in 1881, Italian inventor Alessandro Cruto achieved 500 hours, moving closer to modern incandescent lightbulbs, which last over 1,000 hours and are now typically passed over in favor of LEDs.

Automobiles (1886)

The development of the automobile started in 1672, with the first models still reliant on steam power. Then, in 1804, Isaac de Rivaz created a revolutionary new engine, one of the first examples of an internal combustion engine. This design allowed engineers to build the first prototype automobile in 1807. Carl Benz put the first car into series production; it appeared in 1886 in very small numbers. Automobile production later centered on the Ford Model T, assembled in the Ford Motor Company's factories. Ford launched this model in 1908 and successfully brought the personal-vehicle concept to a broad audience for the first time. Compared to the first Benz car's top speed of 10 mph, the fastest cars today can exceed 330 mph. Despite the rise of electric vehicles, automobiles powered by internal combustion engines are far from obsolete; the technology is alive and kicking.

Airplanes (1903)

Throughout history, the quest for flight has been a remarkable journey. Ancient tales and Greek legends, such as those of Icarus and Daedalus, have inspired generations. Similarly, Leonardo da Vinci's meticulous studies of birds' wings marked a significant leap in understanding aerodynamics. This quest continued through pioneers like Jean Marie Le Bris, who, in 1868, made a daring attempt to soar with his glider. Yet, it was not until the Wright brothers, Orville and Wilbur, emerged that the dream of manned flight became a reality. In 1903, they achieved a groundbreaking feat by inventing and piloting the first airplane, a biplane. The biplane used two stacked wings to generate enough lift to leave the ground, and its flight was "the first sustained and controlled heavier-than-air powered flight."

In the following years, the Wright brothers developed their airplane so that it could fly longer and with more aerodynamic efficiency. Using a small wind tunnel they had built, the Wrights collected more accurate data, enabling them to design more efficient wings and propellers. Thanks to their efforts and those of many others, commercial airliners can now comfortably move between countries at speeds as high as Mach 0.92.

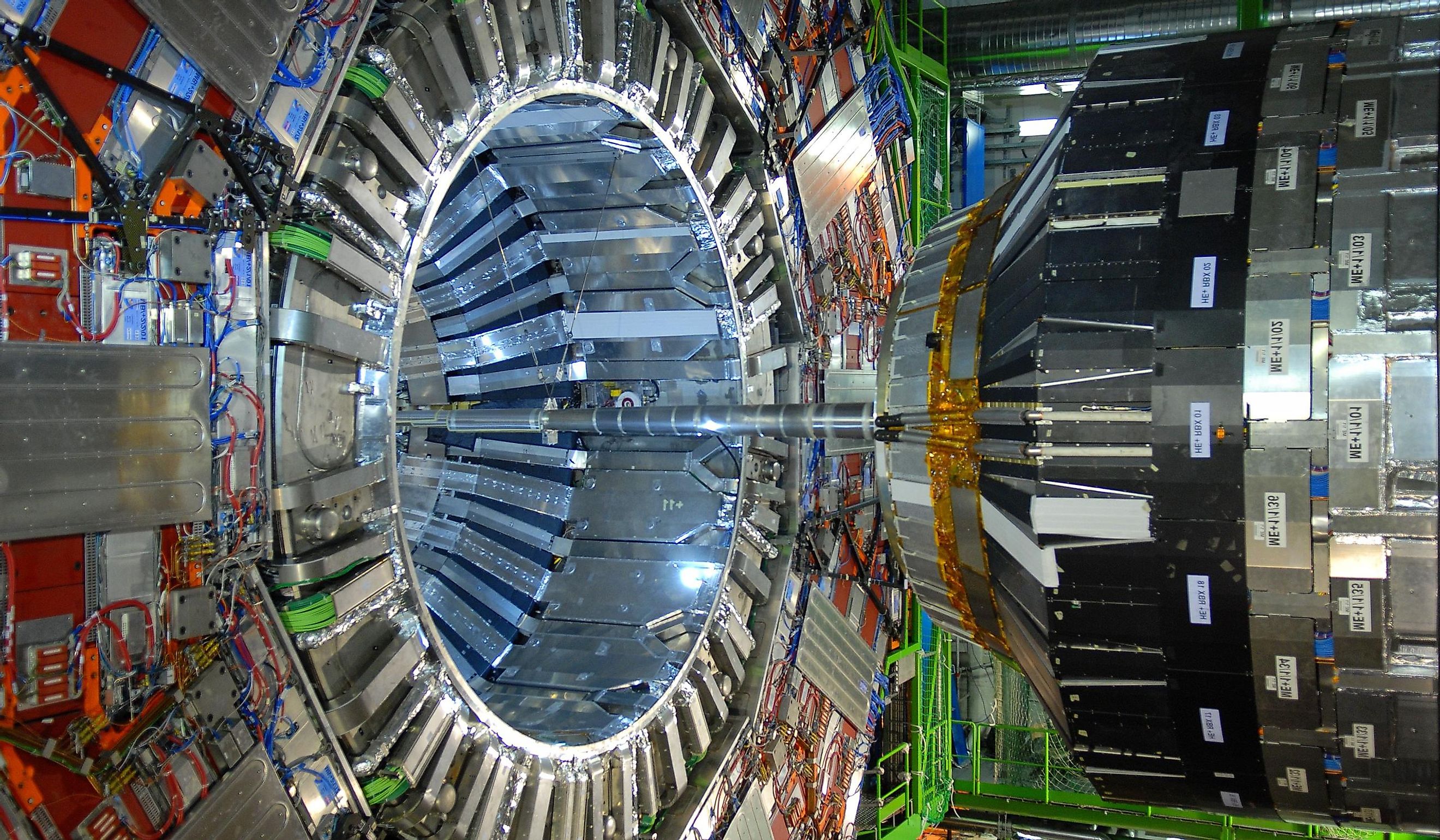

Particle Accelerators (1929)

Human curiosity led us to look ever deeper inside things, first finding molecules, then atoms, and then subatomic particles. In 1927, German physicist Max Steenbeck theorized particle accelerators for the first time while still a student at Kiel. Although Steenbeck was discouraged from pursuing the idea at the time, Hungarian physicist Leo Szilárd filed patent applications for several types of particle accelerators in late 1928 and early 1929, and in 1930 Ernest O. Lawrence constructed one.

Cyclotrons were the most powerful and useful particle accelerator technology until the 1950s. They steer charged particles such as protons along a circular path with a powerful magnetic field while accelerating them to very high speeds (in later accelerator designs, close to the speed of light), then direct them at a target or another particle, allowing scientists to observe novel interactions. In essence, large accelerators are for exploring unknowns in particle physics.

The largest accelerator currently active is the Large Hadron Collider (LHC) near Geneva, Switzerland. It is operated by the European Organization for Nuclear Research, or CERN, which runs numerous experiments with multiple international collaborations. Regarding impact, this essential research center led to the discovery of the Higgs boson in 2012 and, oddly, the creation of the World Wide Web in 1989.

Nuclear Fission (1938)

German chemists Otto Hahn and Fritz Strassmann discovered nuclear fission on December 19th, 1938, while experimenting with the nuclei of atoms. Physicist Lise Meitner and her nephew Otto Robert Frisch then explained the experiment theoretically in January of 1939: a large atom like uranium-235, which has 92 protons and 143 neutrons, is struck by a neutron. This collision turns it into uranium-236 for a short time, which then breaks into two smaller atoms and releases energy along with a few free neutrons. Those neutrons can split further nuclei, and the resulting chain reaction generates massive amounts of energy, which can power turbines in nuclear reactors or create devastating explosions. So, while nuclear reactors are efficient and productive, nuclear weapons are a threat to all.
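The bookkeeping behind this description can be written as a reaction equation. The sketch below is only illustrative: the barium and krypton fragments and the three released neutrons are one commonly cited outcome, assumed here as an example and not taken from the text above. Real fission events produce many different fragment pairs.

```latex
\begin{align*}
{}^{235}_{92}\mathrm{U} + {}^{1}_{0}\mathrm{n}
  &\rightarrow {}^{236}_{92}\mathrm{U}^{*} \\
  &\rightarrow {}^{141}_{56}\mathrm{Ba} + {}^{92}_{36}\mathrm{Kr}
     + 3\,{}^{1}_{0}\mathrm{n} + \text{energy}
\end{align*}
```

Both sides balance: the mass numbers give 235 + 1 = 236 = 141 + 92 + 3, and the proton counts give 92 = 56 + 36.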

In essence, nuclear reactors meticulously control the chain reaction by lowering control rods into the reactor core and surrounding it with water, thus slowing down or halting the reaction. In weaponry, by contrast, the chain reaction is deliberately left to run unchecked to achieve widescale destruction. Finally, although human error and natural disasters have turned nuclear reactors into hazards on occasion, many believe the benefits outweigh the negatives, especially in comparison with the hazards of fossil fuels.

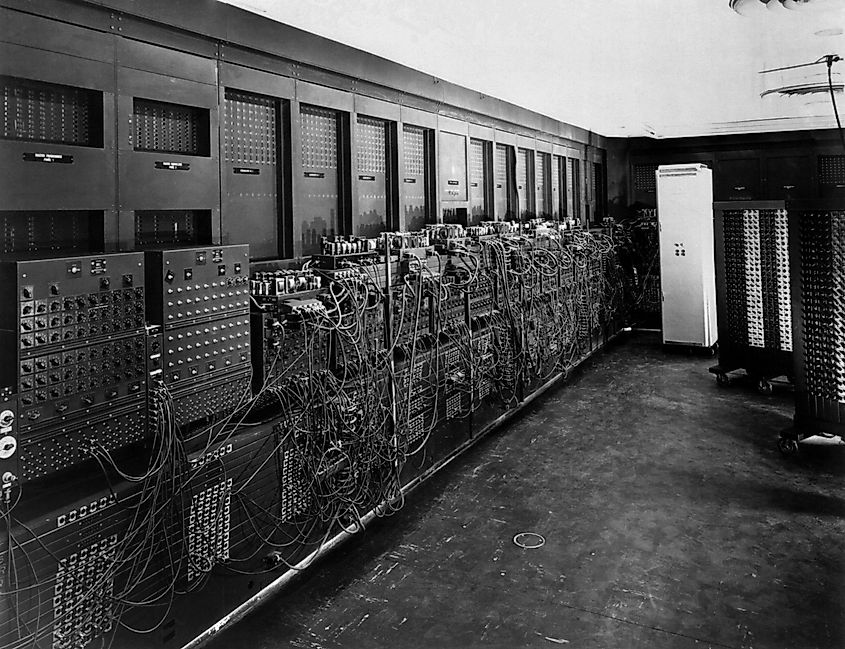

Computer Revolution (1945)

Computers are not just for emails; at their core, they compute, and that function alone is incredibly valuable. Ancient civilizations understood the value of rapid mathematics because math empowered them to build, measure, and navigate. For instance, the abacus was invented around 2400 BC and helped people do simple calculations. Far more sophisticated was the Antikythera mechanism, an ancient device that divers recovered from a shipwreck. It dates back about 2,200 years and is the first known geared analog computer in history.

Regarding the modern binary language of 1s and 0s, Clifford Berry and John Vincent Atanasoff invented the first digital computer in 1939. In 1943, a new machine called Colossus became the world's first electronic, digital, programmable computer. It used 1,500 vacuum tubes (also called valves), had paper-tape input, and could perform several operations, but it was not 'Turing-complete' (capable of any calculation given enough time). The first general-purpose, Turing-complete electronic computer, the ENIAC, came to life in the US in 1945. This monster of a machine filled a large room, yet it had only a tiny capacity for memory and calculation.

After the transistor was invented in 1947, transistors gradually replaced vacuum tubes and made calculations much faster. Nowadays, billions of transistors are packed into our smartphones and aid us in everyday tasks.

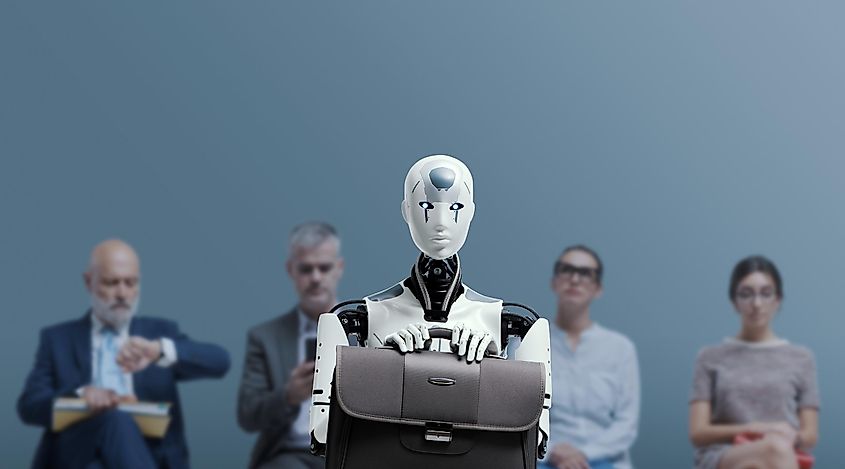

Artificial Intelligence (1956)

Artificial intelligence (AI) refers to any machine that can accomplish tasks that typically only a human can achieve. The difference is in the details: our organic intelligence is based on living cells (neurons) that work together in a rapid, adaptive network of information that allows us to make predictions and choices. Computers, being primarily algorithmic, do not usually have those strengths but easily outperform people at tasks like memorization and calculation. Historically, artificial intelligence as an academic field was founded in 1956, and the English mathematician Alan Turing was an early figure who studied the possibility that engineers might eventually design a truly 'intelligent' computer.

The complex algorithms behind services like Google's search engine and the recommendation systems of Amazon and Netflix are modern examples of AI technology that learns users' preferences and tries to suggest the best result or product for them. Creative tools like the image generator DALL·E 3 and ChatGPT from OpenAI are more recent developments in machine learning AI. In essence, machine learning allows advanced AI models to form knowledge by being fed large amounts of information. This process often mimics the structure of neurons in human brains, albeit in a significantly simpler form, which is why professionals describe these computer systems as 'neural networks.'

Many experts are worried that artificial intelligence could overtake human intelligence and cause mayhem within society. Such a superintelligence might be very difficult to control and contain. Currently, AI scientists are considering ways to implement fail-safes to prevent a disaster. The hope is that people will be able to use, or at least coexist with, AIs; the benefits of achieving an advanced AI might include discovering cures for complex illnesses or automating difficult labor. Automated driving, AI medical diagnoses, and disaster prediction could save a lot of lives. However, these major benefits come with questions of ethical use and social stability.

Space Exploration (1957)

Human curiosity causes us to question the boundaries and limits of what our ancestors believed to be impossible. However, the power of Earth's gravity is one boundary that was impossible to argue with until the last century. The first rocket to reach space was the German V2 missile, in 1944. Then, a heated competition erupted between the United States and the Soviet Union. This 'Space Race' resulted in the Soviet Union delivering the first artificial satellite, Sputnik 1, into Earth orbit in 1957. Just four years later, the Soviets also managed the first successful human spaceflight, which carried a young Russian cosmonaut named Yuri Gagarin beyond Earth's atmosphere. In a grand finale, after years of preparatory missions, Apollo 11 carried Commander Neil Armstrong, Buzz Aldrin, and Michael Collins to the Moon in 1969. Armstrong and Aldrin walked on the surface while Collins remained in lunar orbit, and Armstrong became the first person to step onto the Moon on July 21st, 1969. Since then, humanity has also celebrated the findings of the orbiting Hubble and James Webb telescopes, launched in 1990 and 2021, respectively.

As far as long-term human habitation in space is concerned, there are currently two occupied space stations orbiting Earth: the Tiangong Space Station, launched in 2021, and the International Space Station (ISS), the largest modular space station in low Earth orbit. The ISS is a joint venture involving the United States' NASA, Russia's Roscosmos, Japan's JAXA, Europe's ESA, and Canada's CSA. The first ISS component was launched in 1998, and the station has been operated and maintained ever since. It serves as a microgravity laboratory for research in astrobiology, astronomy, meteorology, physics, and other fields. Astronauts rotate through expeditions that last up to six months. As of writing, 69 long-duration expeditions have been completed, and over 250 astronauts, cosmonauts, and space tourists from 20 different nations have visited the space station.

Modern Medicine

After Alexander Fleming's serendipitous discovery of penicillin in 1928, which shook medicine to its core, humanity saw great medical advancements thanks to rapid technological progress. Prior to 1800, life expectancy was below 40 years, and today it exceeds 80 in many countries.

Factors that contributed to that amazing jump include research into nuclear magnetic resonance, which was first described by Isidor Rabi in 1938. This physical phenomenon, which involves stimulating an atom's nucleus with a magnetic field, led to a remarkable medical application: magnetic resonance imaging (MRI). The imaging breakthrough was published in 1973 and allowed the first working MRI machine to be built and tested on a mouse in 1974, with full production following in the 1980s. Countless lives have been saved by MRI's ability to detect issues related to cancer, strokes, and more.

Another recent and important medical advancement is the CRISPR-Cas9 genome editing technique. Interestingly, the underlying discovery occurred independently in three parts of the world. The first was made by researcher Yoshizumi Ishino and his colleagues in 1987. Ishino and his team accidentally copied part of a DNA sequence, which hinted at the possibility of changing DNA intentionally. CRISPR-Cas9 is the latest iteration of this technique, refining and simplifying the process and improving its effectiveness. As a de facto gene editing tool, it has already been shown to repair defective DNA in mice, effectively curing them of genetic disorders. In other words, diseases that have plagued people for thousands of years may disappear if they are targeted before each person is born. There are, however, reasonable ethical worries about its use, such as creating genetically engineered 'designer children.' Irresponsible application might result in societal class divisions, horrific accidents, or worse.

Future Technology

Thirty years ago, it would have been difficult to imagine calling someone across the world and enjoying a video call from anywhere at all. This rapid progress raises the question, "How much more will technology advance after thirty more years?" Clues and signs point us in the right direction:

Quantum Computers: Computers get more powerful each year, and chips get smaller, but soon, we might hit a wall that could slow things down. Transistors can be seen as simple on-off switches. Their smallest parts, the transistor gates, are currently about three nanometers long and would be difficult or impossible to produce at less than a nanometer. Luckily, a new technology is currently in the works: quantum computing. This breakthrough stems from research into the field of quantum mechanics. The simplest explanation is that the current method uses "bits," which carry information stored as either 0s or 1s. The new unit of information is the "qubit," which can be 0 and 1 at the same time! This could drastically increase computing power, but we are currently in the early years of this technology.
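To make the contrast between a bit and a qubit concrete, here is a minimal Python sketch, not a real quantum program. It assumes the standard textbook model in which a qubit is described by two amplitudes whose squared magnitudes give the chance of reading 0 or 1. The function and variable names are hypothetical and chosen only for illustration.

```python
import math


def equal_superposition():
    """Return amplitudes (a0, a1) for a qubit weighted equally between 0 and 1."""
    amp = 1 / math.sqrt(2)
    return (amp, amp)


def measurement_probabilities(state):
    """The chance of reading 0 or 1 is the squared magnitude of each amplitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


# A classical bit, written in the same notation: it is definitely 0.
classical_bit = (1.0, 0.0)

# A qubit that is "0 and 1 at the same time" (assumed equal weighting).
qubit = equal_superposition()

p0, p1 = measurement_probabilities(qubit)
print(f"P(read 0) = {p0:.2f}, P(read 1) = {p1:.2f}")
```

In this toy model, the classical bit always reads 0, while the qubit reads 0 or 1 with equal probability once it is measured.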

Nuclear Fusion: Unlike nuclear fission, nuclear fusion does not split atoms by shooting particles at them. Instead, it pushes light atomic nuclei together until they fuse. This process also produces an enormous amount of energy and is theoretically more efficient and sustainable than a nuclear fission power plant. However, fusion is not nearly as simple to achieve as fission; a fusion device requires a temperature about seven times hotter than the core of the Sun. Unfortunately, constructing an environment capable of withstanding these conditions is slow and extremely expensive. So far, fusion experiments have consumed more energy overall than they produce, and it will take time to develop a prototype that can produce energy constantly and efficiently. On the other hand, a fully functioning fusion reactor could amount to a new 'industrial revolution' because it promises cheap, safe, virtually unlimited energy.

Lab-Grown Edible Meat: Cultured meat is another long-awaited breakthrough. Jason Matheny brought the concept to public attention in the early 2000s after he co-authored a paper on it. The theoretical possibility of growing meat in a factory rather than on a farm has been of interest since at least 1931, when Winston Churchill wrote: "We shall escape the absurdity of growing a whole chicken to eat the breast or wing, by growing these parts separately under a suitable medium." Fundamentally, the process involves taking a small sample of cells from donor livestock and growing it in a controlled environment. Although this was already possible in 2013, the time and cost needed to optimize production are no small matter. Years ago, a regular-sized synthetic hamburger cost $330,000. Currently, that cost has dropped to about $9.80 per burger. While production costs are falling rapidly, there is still room for improvement. The price is still 3 to 5 times higher than that of a grass-fed burger, and growing enough to satisfy high demand is going to take time.

Humans are the result of an ongoing evolution that has continued for billions of years, and there is no sign of this stopping. However, the past hundred thousand years reveal that humans now progress technologically instead of genetically. We have shaped our tools and our clothes, and we have defined our cultures with our languages and traditions. When we stopped moving to follow migrating food sources, we settled down to build and farm. We created stable communities and assigned specialized roles amongst ourselves. We have explored our world and looked beyond it. Our progress has brought us to an unnerving edge with powerful discoveries and inventions that could either bless the Earth or scar it.